Chunking in NLP still surviving while modern LLMs evolving at a greater speed.

During the phase of symbolic NLP, natural language processing was entirely rule-based and symbolic. It worked on written grammar rules, dictionaries, and syntax trees. For example., ELIZA, a chatbot that answer based on pattern matching rules.

But the challenge it was not accurate and scalable. Then emerges the phase of statistical NLP, natural language processing based on N-gram language model that predicts the next possible words in the sentence using probability derived Machine Learning and Statistical techniques.

Though it has one big limitation that it cannot predict next word that are not presented in the datasets. This challenge emerged the phase of deep learning era.

The deep learning NLP was based on RNN (Recurrent Neural Networks) that uses sequential learning method. In its architecture, next word prediction happen sequentially. Google Translate, for instance, initially used phrase-based statistical machine translation before moving to deep learning revolution.

With ongoing research to improve deep learning NLP led to the development of Transformers and models like BERT (2018) and GPT (2018), which shifted NLP from handcrafted rules to end-to-end deep learning.

In this blog, we will learn about traditional NLP working in the context of text processing.

Before we begin, following is a natural flow of traditional Natural Language Processing:

Tokenization → PoS Tagging → Chunking → Parsing

Let’s understand each of them in detail…!

What Is Tokenization?

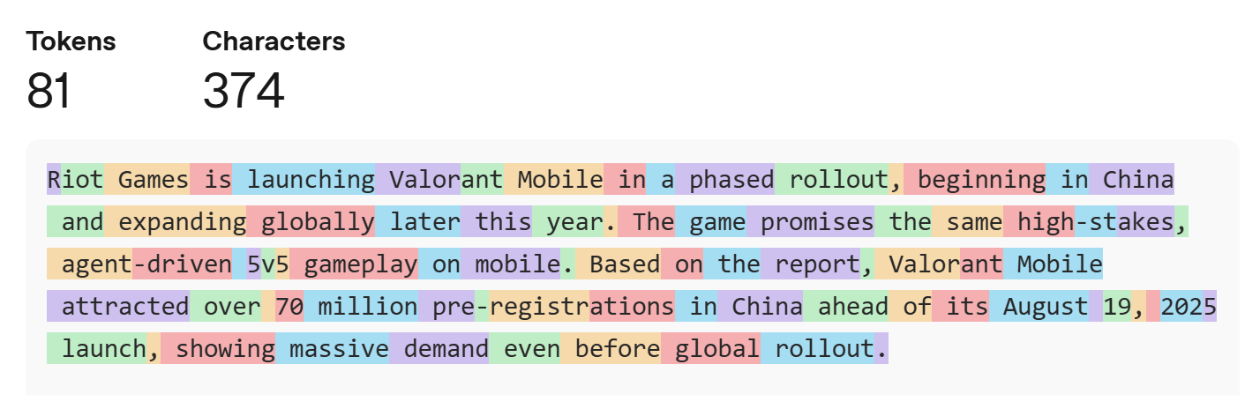

Tokenization is a process of breaking down text into smaller units called tokens that generally corresponds to ~4 characters. Tokenization helps the model to understand each characters (symbol, number, and word) effectively.

In the image, token counts 81 and characters 374 which are highlighted in different colors. Each highlights contribute to making a token with unique IDs.

Further, these tokenized units process for PoS Tagging.

What Is PoS Tagging?

PoS Tagging also known as Part-of-Speech tagging refers to labeling each word in a sentence with its grammatical role, such as noun, verb, adjective, determiner, etc.

Let’s consider this sentence as an example; “The quick brown fox jumps over the lazy dog.” The PoS tag would be as follow.

The/DT → Determiner

quick/JJ – Adjective

brown/JJ – Adjective

fox/NN – Noun (singular)

jumps/VBZ – Verb (3rd person singular present)

over/IN – Preposition

the/DT – Determiner

lazy/JJ – Adjective

dog/NN – Noun (singular)

Basically, PoS Tagging answer to “What role does this word play in the sentence?” Next, chucking process begin.

What Is Chunking In NLP?

Chucking refers to grouping of words in a sentence based on the PoS Tagging. Alternatively, extracting meaningful words and grouping on the basis of shallow phrases.

Let’s consider the same example; “The quick brown fox jumps over the lazy dog.” The chucking result as follow:

[NP The quick brown fox] [VP jumps] [PP over] [NP the lazy dog]

Further, chucking result processed for parsing.

What Is Parsing?

Parsing is the process of analyzing a sentence’s full grammatical structure, typically by building a parse tree that shows how words group into phrases and how phrases connect according to grammar rules.

There are two types of parsing method. First, Consistency parsing and second, Dependency Parsing. The dependency parsing is very popular and widely used in modern NLP such as SpaCy and StandfordNLP.

Why Is Chucking & Parsing Important In NLP?

Chucking and Parsing both help traditional NLP to understand word-to-word relationship and sentence grammar. This makes tasks like machine translation, information extraction, and text summarization more accurate and structured.

Let’s pick one Grammar checker tool – Grammarly! And understand how text processing happen under the hood.

- Tokenization: Breaks your paragraph into words/punctuation.

- POS tagging: Labels each token (noun, verb, adverb, article, etc).

- Chunking: Groups words into meaningful phrases (noun phrases, verb phrases).

- Parsing: Builds a syntax tree or dependency graph to show grammatical relations.

Grammarly uses machine learning and LLM assistance for optimum performance. It uses ML classifiers and LLMs to detect more subtle errors like style, tone, awkward phrasing.

Therefore, Grammarly approaches hybrid technology, a traditional NLP functionality and modern layer of LLM.

Does Traditional NLP Still Exist Today? If Yes, How?

Yes, traditional NLP still exists today, though its role has shifted. Foundational models operate basis on tokenization to function. POS tagging, parsing, lemmatization are still used inside preprocessing pipelines, corpora annotation, and error analysis.

For example, Before GPT models, datasets like Penn Treebank and Universal Dependencies (POS/parse-annotated corpora) were built using traditional NLP.

So, traditional NLP hasn’t died, it has changed into foundation layer for modern AI system.

How Does Modern LLMs Transforming Natural Language Processing?

Modern Large Language Models are trained on massive parameters and datasets, thanks to computational resources.

With dense neural network development, algorithm learns about pattern and relationship between words through self-supervised learning which result in emergent abilities of LLM and making it optimized for variety of use cases.

Be it question and answer, reasoning capabilities, text summarization, text generation, and so on. LLM learn grammar, semantics, and world knowledge directly from billions of text examples.

In addition to this, modern LLMs are capable to handle multiple tasks. A single model can perform multiple tasks – translation, reasoning, coding, and summarization just from prompting. In fact, they are well trained to generate response in human-like tone and language.

That way LLMs are transforming Natural Language Processing at its core.

Conclusion

So, I would say that traditional NLP is still alive and continues to shape foundational models intelligently. Whether training foundational LLMs or building one from scratch, there is still need to combine traditional NLP with deep learning algorithm. Use cases like writing and editing, customer support, healthcare, programming, and Q&A involve hybrid approach.

Well, that’s all in this blog. I hope it helped you learn about chunking in NLP effectively. Thanks for reading 🙂

Frequently Asked Questions

Does chunking in NLP still matter in 2025, or is it outdated?

Chunking still matters, especially in traditional NLP tasks like grammar checking and information extraction. However, modern LLMs learns during training and improve.

Is chunking still used in tools like Grammarly or Google Translate?

Yes, but not completely. Tools like Grammarly still use chunking and parsing in their traditional NLP pipeline, but suggestions are refined using modern machine learning and LLMs for better accuracy.

What is the difference between chunking and parsing in simple words?

Chunking breaks a sentence into smaller phrase groups, while parsing builds a complete grammar tree that shows how all the words are connected.

Do AI models like GPT-4 or Claude actually use chunking?

GPT based models break text into tokens instead of explicit chunks. But the concept of chunking, grouping related words happens implicitly inside the attention layers.

References

ELIZA – Wikipedia

N-gram Language Models – Stanford

Disclaimer: The information written on this article is for education purposes only. We do not own them or are not partnered to these websites. For more information, read our terms and conditions.

FYI: Explore more tips and tricks here. For more tech tips and quick solutions, follow our Facebook page, for AI-driven insights and guides, follow our LinkedIn page.

Bharat Kumar

Bharat is a content editor at The Next Tech for the past 3 years. He is studying Generative AI (GenAI) from Analytics Vidhya and share his learnings by writing on Generative Engines, Large Language Models, and Artificial Intelligence. In addition to his editorial work, Bharat is active on LinkedIn, where he shares bite-sized updates and achievements. Outside work, he’s known as a Silver‑rank Valorant player, reflecting his competitive edge and strategic mindset.

Related Posts

Machine Learning

How Can Startups Streamline Machine Learning Deployment With...

By: Neeraj Gupta, Sat August 23, 2025

Machine learning (ML) proposes startups an aggressive advantage, enabling..

Machine Learning

What Are Emergent Properties In LLMs? Examples & Their ...

By: Bharat Kumar, Mon August 18, 2025

Emergent properties in LLMs are linked with the evolution of Natural Language..

Machine Learning

What Tools And Frameworks Help Startups Deploy ML Models Eff...

By: Neeraj Gupta, Sun August 17, 2025

Building a machine learning model in a lab is exhilarating. You have clean..

Machine Learning

How Do Successful Startups Handle Real-World ML Deployment C...

By: Neeraj Gupta, Sun August 17, 2025

Building a machine learning model in a controlled environment is invigorating,..

Machine Learning

What Most ML Practitioners Get Wrong About Data And Algorith...

By: Neeraj Gupta, Sun August 10, 2025

Suppose you ask most machine learning practitioners how to improve model..

Machine Learning

Why Chasing The Latest ML Algorithms Might Be Wasting Your T...

By: Neeraj Gupta, Sun August 10, 2025

I observe weekly developments in machine learning. Each week, a new model..

Copyright © 2018 – The Next Tech. All Rights Reserved.