Zhipu AI, now rebranded as “Z.ai”, has reached its latest milestone: the launch of GLM-4.5.

Unveiled at the World AI Conference in Shanghai on 29 July 2025, GLM-4.5 is Zhipu’s most advanced model to date, designed for agent-native intelligence, multi-modal reasoning, and cost-effective deployment. The launch also introduces a lightweight version, GLM-4.5 Air, built for high performance on low-power setups.

But what makes this launch truly disruptive is its open-source licensing and dual-mode reasoning, which let it challenge not just Chinese competitors like DeepSeek but also GPT‑4o and Claude 3 on the global stage.

Table of Contents

- What Is GLM-4.5?

- GLM-4.5 vs GLM-4.5 Air

- Key Features Of GLM 4.5 Large Language Model

- Performance Benchmark Of GLM 4.5 And GLM 4.5 Air

- Pricing Comparison: GLM 4.5 vs Deepseek vs Claude 3 vs GPT 4o

- How To Access New GLM 4.5 Model?

- What Developers Can Build Using GLM 4.5?

- What Are The Use Cases Of GLM 4.5?

- Does GLM‑4.5 Have a Support Community?

- In The End

What Is GLM-4.5?

GLM-4.5 is the latest large language model developed by Zhipu AI, a leading Chinese AI company with backing from institutions like Tsinghua University. While earlier GLM models gained attention for their bilingual (English–Chinese) proficiency, GLM-4.5 breaks new ground with:

- 355 billion total parameters (with 32 billion active).

- An “agent-native” design optimized for tools, functions, and workflows.

- Two operational modes — “thinking” and “non-thinking”.

- Full open-source release under MIT/Apache 2.0 licenses.

This model family is designed to power intelligent agents, autonomous decision-making systems, and high-speed inference tasks across diverse environments—from cloud to edge devices.

GLM-4.5 vs GLM-4.5 Air

| Basis | GLM-4.5 | GLM-4.5 Air |
|---|---|---|
| Total Parameters | ~355B | ~106B |
| Active Parameters | ~32B | ~12B |
| Reasoning Modes | Thinking / Non-thinking | Thinking / Non-thinking |
| Deployment Target | Enterprise-grade setups | Consumer GPUs / edge devices |
| Speed | ~200 tokens/sec (non-thinking) | ~200 tokens/sec (non-thinking) |
| Cost Efficiency | Lower than GPT-4o & DeepSeek | Extremely low |
| License | MIT / Apache 2.0 | MIT / Apache 2.0 |

GLM-4.5 Air was specifically designed to make AI more accessible. It can run efficiently on just eight NVIDIA H20 GPUs, slashing infrastructure requirements in half compared to rivals like DeepSeek V2.

Key Features Of GLM 4.5 Large Language Model

1. Dual Reasoning Modes

Thinking mode handles high-complexity reasoning, coding, and agentic workflows. Non-thinking mode generates ultra-fast responses with minimal resource use (~200 tokens/sec).
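To make the two modes concrete, here is a minimal sketch of how an application might choose a mode per request. The `thinking` field, its `"enabled"`/`"disabled"` values, the model id `glm-4.5`, and the `pick_mode` routing rule are all assumptions for illustration, so check the official API reference for the real switch.

```python
def pick_mode(task: str) -> bool:
    """Hypothetical routing rule: think on hard tasks, answer fast otherwise."""
    return task in {"coding", "math", "agent"}


def build_chat_payload(prompt: str, thinking: bool, model: str = "glm-4.5") -> dict:
    """Build a chat request body with the reasoning mode toggled.

    ASSUMPTION: the `thinking` field and its values are illustrative only;
    the provider's API may expose the mode switch differently.
    """
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "thinking": {"type": "enabled" if thinking else "disabled"},
    }


# A coding task is routed to thinking mode, small talk to non-thinking mode.
slow = build_chat_payload("Refactor this function.", pick_mode("coding"))
fast = build_chat_payload("Say hello.", pick_mode("chitchat"))
print(slow["thinking"], fast["thinking"])
```

Routing by task type like this is one simple way to keep the slower thinking mode for requests that actually need it.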

2. Agentic AI Use Cases

Testing shows that GLM-4.5 goes beyond plain conversation and supports function calling, tool use, multi-step task planning, and reasoning pipelines.
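Function calling usually means the model returns a structured request and your code runs the matching function. The sketch below shows that dispatch step only. It assumes the JSON-schema tool format common to OpenAI-compatible chat APIs, and `get_weather`, `TOOLS`, `dispatch`, and the sample call are hypothetical names written by hand, not output from GLM-4.5.

```python
import json
from typing import Any, Callable


def get_weather(city: str) -> str:
    """Hypothetical tool: a stand-in for a real weather lookup."""
    return f"Sunny in {city}"


# Registry mapping tool names to the Python functions that implement them.
TOOLS: dict[str, Callable[..., Any]] = {"get_weather": get_weather}

# Tool description you would send along with the chat request (assumed format).
TOOL_SPECS = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}]


def dispatch(tool_call: dict) -> dict:
    """Run the requested function and wrap the result as a tool message."""
    name = tool_call["function"]["name"]
    args = json.loads(tool_call["function"]["arguments"])
    if name in TOOLS:
        result = TOOLS[name](**args)
    else:
        result = f"Unknown tool: {name}"
    return {"role": "tool", "tool_call_id": tool_call["id"], "content": str(result)}


# Hand-written stand-in for what a model's tool call might look like.
example_call = {
    "id": "call_1",
    "type": "function",
    "function": {"name": "get_weather", "arguments": '{"city": "Shanghai"}'},
}
reply = dispatch(example_call)
print(reply)
```

In a real agent loop, the returned tool message would be appended to the conversation and sent back to the model so it can plan the next step.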

3. Free & Open Source

Both versions are released under MIT and Apache 2.0 licenses, making them transparent, modifiable, and free to use commercially.

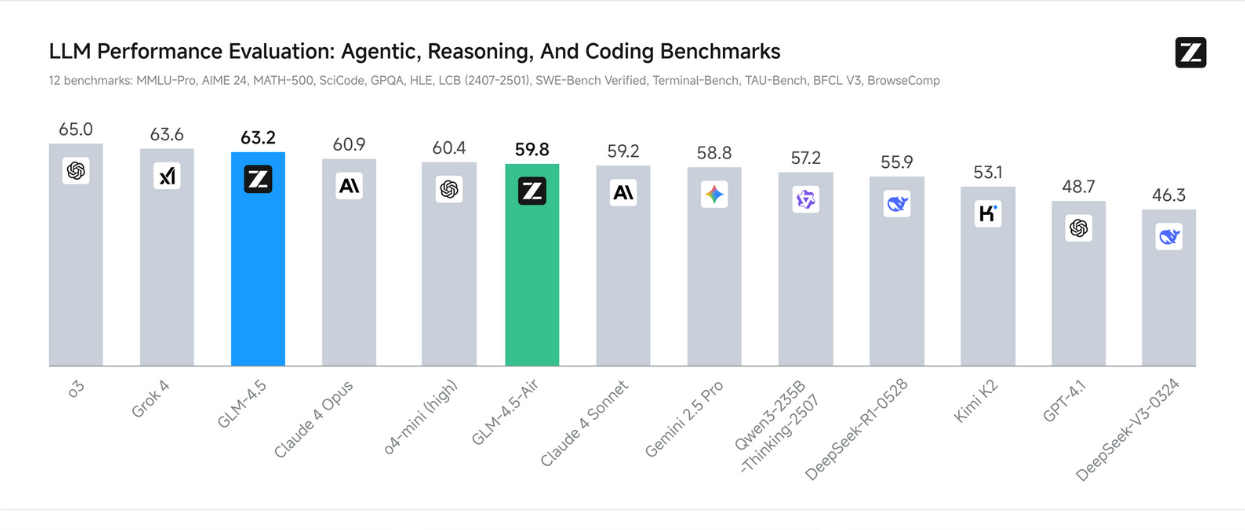

Performance Benchmark Of GLM 4.5 And GLM 4.5 Air

The benchmark comparison chart shows how different large language models perform on agentic, coding, and reasoning tasks. It highlights Zhipu AI’s new models, GLM-4.5 and GLM-4.5 Air, and how they stack up against competitors like GPT-4, Claude 4, Grok, and DeepSeek.

GLM-4.5 ranks 3rd overall and GLM-4.5 Air ranks 6th, an impressive showing for both models.

Pricing Comparison: GLM 4.5 vs Deepseek vs Claude 3 vs GPT 4o

| Model | Input Tokens ($/million) | Output Tokens ($/million) |
|---|---|---|
| GPT‑4o | ~$5–10 | ~$15–20 |
| Claude 3 | ~$25 | ~$75 |
| DeepSeek | ~$0.14 | ~$0.30 |
| GLM-4.5 | ~$0.11 | ~$0.18 |

Such low pricing opens serious possibilities for startups, educators, and IT companies to deploy models at scale without burning through cloud credits.
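To see what these prices mean in practice, the sketch below applies the approximate per-million-token figures from the table above to one workload. The workload size (10 million input tokens and 2 million output tokens) is a made-up example, and GPT‑4o is split into low and high entries because the table lists it as a range.

```python
# Approximate prices in USD per million tokens, copied from the table above.
# Each entry is (input price, output price).
PRICES = {
    "GPT-4o (low)": (5.00, 15.00),
    "GPT-4o (high)": (10.00, 20.00),
    "Claude 3": (25.00, 75.00),
    "DeepSeek": (0.14, 0.30),
    "GLM-4.5": (0.11, 0.18),
}


def workload_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the cost in USD of a workload at the listed prices."""
    input_price, output_price = PRICES[model]
    return (input_tokens / 1_000_000) * input_price + (output_tokens / 1_000_000) * output_price


# Hypothetical workload, chosen only for illustration.
INPUT_TOKENS = 10_000_000
OUTPUT_TOKENS = 2_000_000

for model in PRICES:
    cost = workload_cost(model, INPUT_TOKENS, OUTPUT_TOKENS)
    print(f"{model:<14} ${cost:,.2f}")
```

At these figures the sample workload costs about $1.46 on GLM-4.5 and $400 on Claude 3, which is the gap the table is pointing at.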

How To Access New GLM 4.5 Model?

You can access the GLM-4.5 models on the Hugging Face platform. On GitHub, you can find the source code, training methods, and tools. On the official website (z.ai), developers can explore the model API, agent SDKs, and a sandbox environment.
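For the API route, a request could look roughly like the sketch below, which uses only the Python standard library. Everything specific here is an assumption: the placeholder `BASE_URL`, the OpenAI-style `/chat/completions` path, bearer-token authentication, and the model id `glm-4.5`. Take the real values from the official API documentation.

```python
import json
import urllib.request

# ASSUMPTION: placeholder endpoint, not a real one. Replace it with the base
# URL given in the official API documentation.
BASE_URL = "https://api.example.invalid/v1"


def build_request(api_key: str, prompt: str, model: str = "glm-4.5",
                  base_url: str = BASE_URL) -> urllib.request.Request:
    """Prepare (but do not send) a chat completion request.

    ASSUMPTION: an OpenAI-style route and bearer-token auth.
    """
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send the request and decode the JSON reply (needs network and a valid key)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


# Building the request is safe offline; call send(request) once the real
# endpoint and API key are filled in.
request = build_request(api_key="YOUR_API_KEY", prompt="Summarize GLM-4.5 in one line.")
print(request.get_method(), request.full_url)
```

Keeping the request builder separate from `send` makes it easy to inspect or log the payload before any tokens are billed.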

What Developers Can Build Using GLM 4.5?

Developers can build the following real-world applications with GLM‑4.5 and GLM‑4.5 Air.

1. Autonomous AI Agents

- Tools like AutoGPT, BabyAGI, or CrewAI-style frameworks.

- Task-driven agents that can plan, reason, and execute via APIs or function calling.

2. Advanced Reasoning Workflows

- Multi-step logic processors (e.g., legal analysis, academic tutoring, math solvers).

- Interactive tutoring systems (especially in STEM subjects).

3. Content Generation Tools

- Blog post writers, copywriting assistants, social media caption generators.

- News summarizers and multilingual content repurposers.

4. RAG Systems

- Smart document search + Q&A from PDFs, databases, and CRMs.

- Enterprise knowledge base assistants.

5. Developer Tools

- AI-based code generation (Python, Java, SQL, etc.)

- Inline documentation writers, bug fixers, or terminal agents.


What Are The Use Cases Of GLM 4.5?

Healthcare organizations can benefit from GLM-4.5 through, for instance, AI-powered symptom checkers, health advice, and summarization. In education, it can power AI tutors for math, science, and language learning. In finance, it may act as a financial advisor once fine-tuned on domain datasets.

Does GLM‑4.5 Have a Support Community?

Yes, Zhipu AI’s GLM-4.5 has a developer and open-source community for sharing knowledge and updates. Additional resources such as code examples, fine-tuning scripts, model weights, and instructions for local deployment can be found on the official platform.

In The End

The crown for best open-source model previously belonged to Kimi K2, then Qwen3, and now it passes to GLM-4.5. For developers, researchers, and enterprises watching the AI cost curve, GLM-4.5 offers power at a fraction of the price.

The real question is: how would your organization use GLM-4.5? Share your thoughts in the comments. Thanks for reading 🙂

Disclaimer: The information in this article is for educational purposes only. We do not own, and are not partnered with, the websites mentioned. For more information, read our terms and conditions.
