Google’s AI Mode typically refuses to answer because of its pre-programmed restrictions, or because it lacks relevant, appropriate information in its training data to address the query safely and responsibly.

This makes it clear that generative AI models (such as Google’s Gemini in AI Overviews and AI Mode) are designed to operate within certain safety parameters and to refuse requests that are potentially harmful or unethical.

Some users on LinkedIn have observed this, and Google acknowledges that AI Mode is still experimental. Non-factual answers and mistakes in AI responses are therefore to be expected.

This intrigued me and pushed me to dig deeper into the research. I came up with five strong possibilities for why the AI model refuses to answer questions or fails to generate citations.

Possibilities When AI Mode Refuses To Answer Questions

There are several cases where AI models might fail to generate accurate or relevant citations, or even generate fabricated ones.

1. Hallucinations (Fabricated Citations/Information)

I think this is the most fundamental cause of failure in generative AI models. In AI Overviews and AI Mode, fabricated or broken citations are a real possibility because these features pull their sources from indexed websites, and an index can fall out of date.

Imagine this: you publish a post and submit it to a search engine, which crawls and indexes it. A few months later, you delete the post, so its URL now returns a 404 or 403 error. The large language model might still “know” about it and could cite or summarize it even though it’s gone.

This can lead to fabricated citations that appear to support false claims. The model might also cite a real source but misrepresent its content, claiming the source supports something it doesn’t.
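If you want to audit an AI answer for the deleted-page problem described above, a quick first pass is to request each cited URL and look at its HTTP status code. Below is a minimal sketch in Python using only the standard library, assuming you have already copied the cited URLs out of the AI response by hand. The function names `classify_status` and `check_citation` and the label names are hypothetical, chosen for illustration; they do not reflect how Google or any AI product validates citations.

```python
import urllib.error
import urllib.request

# Hypothetical labels for a quick citation audit; this grouping is our own,
# not a set of categories used by Google or any AI product.
GONE = {404, 410}             # page deleted or removed
RESTRICTED = {401, 402, 403}  # login required, payment required, or forbidden


def classify_status(code: int) -> str:
    """Map an HTTP status code to a rough citation-health label."""
    if 200 <= code < 300:
        return "ok"
    if code in GONE:
        return "gone"
    if code in RESTRICTED:
        return "restricted"
    return "other"  # e.g. 405 (HEAD not allowed) or 5xx server errors


def check_citation(url: str, timeout: float = 10.0) -> str:
    """Send a HEAD request to a cited URL and label the final response.

    urllib follows redirects automatically, so a post that now redirects
    to an error page is labelled by that error page's status code.
    """
    request = urllib.request.Request(
        url, method="HEAD", headers={"User-Agent": "citation-check/0.1"}
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return classify_status(response.status)
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses are raised as HTTPError; err.code holds the status.
        return classify_status(err.code)
    except OSError:
        # URLError subclasses OSError; this also covers DNS failures,
        # refused connections, and read timeouts.
        return "unreachable"
```

Calling `check_citation(url)` on each cited link gives you a first indication of which citations point to pages that are gone or locked. This is only a rough filter: some sites return 200 for a "not found" page or a paywall teaser, and some servers reject HEAD requests (labelled "other" here, where retrying with GET may help). It also cannot tell you whether a live page actually supports the claim; that still needs a human read.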

2. Lack of Grounding Data for Specific or Niche Queries

Another possibility is that the query concerns information that is very sensitive, extremely obscure, highly specialized, or so recent that it hasn’t been indexed yet.

In these cases, the AI may struggle to find verifiable sources, so it may generate no citations at all, or less reliable ones.

3. Ambiguous or Unclear Prompts

If your prompt is incomplete or contains ambiguous words, the AI may struggle to pinpoint the specific factual claims that require citation, or it may make assumptions that lead to less precise sourcing.

Here you can check prompt techniques for AI Mode that produce better replies with accurate citations and information. A clear, complete prompt improves the quality of the output, including its citations.

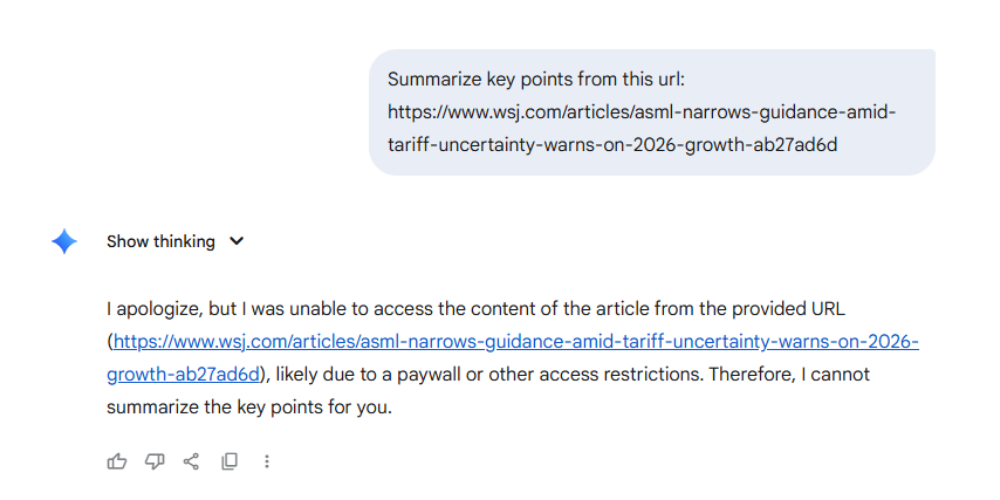

4. Content Behind Paywalls or Requiring Login

AI models typically access publicly available web content. If the information is behind a paywall, requires a login, or is on a highly restricted database, the AI may not be able to access and cite it.

For example, I asked Gemini to summarize the key points of a WSJ article. It returned this answer: “I apologize, but I was unable to access the content of the article from the provided URL, likely due to a paywall or other access restrictions. Therefore, I cannot summarize the key points for you.”

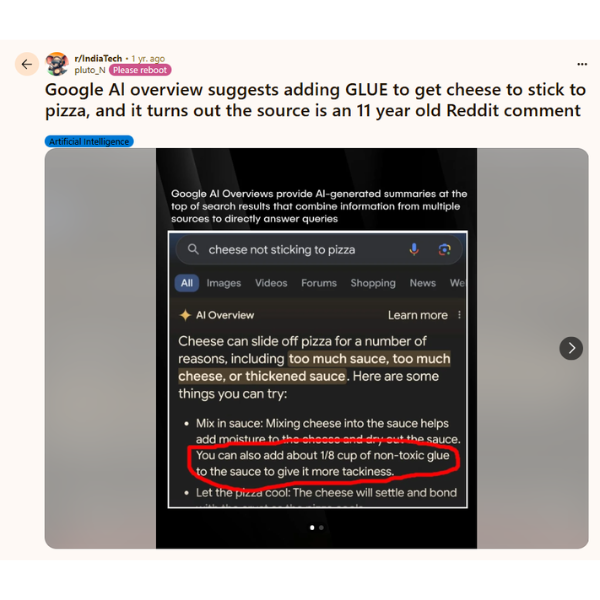

5. Satirical, Humorous, or Opinion-Based Content in Training Data

AI models can sometimes struggle to differentiate between factual content and satirical, humorous, or opinion-based content, especially if it’s highly prevalent in their training data from social media, forums like Reddit, or satirical news sites.

If the AI draws from such sources without understanding the context, it might present humor or opinion as fact and then fail to cite a genuinely authoritative source.

For example, I searched for “glue on pizza,” and one of the cited sources was a Reddit post in which a user says, “Google AI overview suggests adding GLUE to get cheese to stick to pizza.”

The AI picked up the suggestion but not its satirical context. That is why it is important to view AI-generated content with a critical eye, verify that each cited source actually supports the claim the AI makes, and fact-check independently using traditional search.

Frequently Asked Questions

Why is Google AI Mode not answering my query?

If Google AI Mode is not answering your query, it usually means there isn’t enough grounding data, the request is extremely obscure, or the topic falls under restrictions that prevent the model from generating an answer.

These restrictions reflect AI ethics considerations built into the models during development. AI development raises several ethical questions that deserve careful examination.

What causes citation failure in Google’s AI responses?

AI models generate their own citations, but they are not infallible. They may produce hallucinations (fabricated citations), misattributions, or invented definitions.

Citations can also fail if your prompt is incomplete, because the large language model may not fully understand your query.

What kind of content does AI Mode refuse to discuss?

AI models generally refuse to discuss sensitive topics (self-harm, terrorism, drug manufacturing, warfare guidance) and unverified claims (conspiracy theories and health misinformation).

Why does ChatGPT cite but Google doesn’t?

Both ChatGPT and Google’s AI Overviews and AI Mode cite websites as reference sources, but each follows its own, independent approach to generating citations.

In ChatGPT, the LLM is trained on a large corpus, and further machine learning and deep learning determine which relevant, helpful content is picked up and shown as a citation source.

In AI Mode, factors like domain trust, E-E-A-T criteria, semantic conciseness, and clarity matter most. Content is evaluated against these signals, and citations are generated accordingly.

Author’s Recommendation:

What Is Google AI Mode? How To Enable On Mobile & Desktop?

10 Google AI Mode Facts That Every SEOs Need To Know

What Is Project Astra AI Glasses With Gemini Live Experience

Disclaimer: The information in this article is for educational purposes only. We do not own, and are not partnered with, the websites mentioned. For more information, read our terms and conditions.

FYI: Explore more tips and tricks here. For more tech tips and quick solutions, follow our Facebook page; for AI-driven insights and guides, follow our LinkedIn page.
