Zhipu AI, a Chinese AI company, has introduced two advanced large language models: GLM 4.5 and GLM 4.5 Air. The company describes them as its most intelligent models to date for use cases like reasoning, coding, and agentic tasks.

The model is not currently available as downloadable software. Developers can access it through the official website, Hugging Face, or the GitHub repository.

In the meantime, I figured out a way to download GLM 4.5 and run it locally on your computer. Yes, you heard that right!

This article shows you, step by step, how to download GLM 4.5 and run it with Python. By the end, you should feel confident running the GLM 4.5 model locally on your computer.

Critical System Requirements

- Python: 3.9 to 3.12 (Python 3.13 is not yet supported by key dependencies).

- PIP: Latest version for proper dependency resolution.

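- VRAM: At least 16GB as a baseline. The full GLM 4.5 and GLM 4.5 Air weights need considerably more GPU memory than this, so check the model card for the variant you plan to run.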

- GPU Required: An NVIDIA CUDA GPU (for example, RTX 30/40 series) is the standard setup, since vLLM is built primarily for CUDA GPUs.
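Before installing anything, you can check how much VRAM your NVIDIA GPUs report. This minimal sketch shells out to `nvidia-smi` (installed with the NVIDIA driver) using its standard `--query-gpu` options and compares each card against the 16GB baseline above. Treat that threshold as this guide's baseline, not an official requirement.

```python
from __future__ import annotations

import shutil
import subprocess

# The 16GB baseline in MiB (the unit nvidia-smi reports). 16GB cards usually
# report slightly less than 16 * 1024 MiB, so allow a little slack.
MIN_VRAM_MIB = 16000


def parse_vram_mib(csv_output: str) -> list[int]:
    """Parse one memory.total value (MiB) per line, skipping non-numeric rows."""
    return [int(line.strip()) for line in csv_output.splitlines() if line.strip().isdigit()]


def query_gpu_vram_mib() -> list[int] | None:
    """Return total VRAM per NVIDIA GPU, or None if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return None
    return parse_vram_mib(result.stdout)


if __name__ == "__main__":
    vram = query_gpu_vram_mib()
    if vram is None:
        print("No NVIDIA GPU found (nvidia-smi missing or failed).")
    else:
        for index, mib in enumerate(vram):
            verdict = "meets" if mib >= MIN_VRAM_MIB else "is below"
            print(f"GPU {index}: {mib} MiB {verdict} the 16GB baseline")
```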


Step 1 – Download GLM 4.5 Model From SourceForge

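You can download the GLM 4.5 project from its official SourceForge project page. This package contains the project code and examples; the model weights themselves are fetched from Hugging Face (see Step 4). Once downloaded and extracted, your project folder will include: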

- .github/ folder

- example/ folder

- inference/ folder

- resources/ folder

- .gitignore file

- requirements.txt

- .pre-commit-config.yaml

- LICENSE and README files

Step 2 – Install Python 3.9

The GLM 4.5 local setup uses modern Python libraries like transformers, vllm, and accelerate, which require Python 3.9 or later.

- Go to the official Python.org website and download a supported version (3.9 to 3.12).

- Download the Windows installer file.

- During installation, check the box that says “Add Python to PATH”.
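To confirm that the Python interpreter on your PATH falls in the supported range, a small check like this is enough. The 3.9 lower bound comes from this guide, and the 3.13 upper bound reflects the dependency issues described later in this article; adjust both if the project's requirements change.

```python
import sys

# Supported range used in this guide: 3.9 up to (but not including) 3.13.
MIN_VERSION = (3, 9)
MAX_EXCLUSIVE = (3, 13)


def is_supported_python(version=None) -> bool:
    """Return True if `version` (default: the running interpreter) is in range."""
    major_minor = tuple(version if version is not None else sys.version_info)[:2]
    return MIN_VERSION <= major_minor < MAX_EXCLUSIVE


if __name__ == "__main__":
    current = ".".join(map(str, sys.version_info[:3]))
    if is_supported_python():
        print(f"Python {current} is in the supported range.")
    else:
        print(f"Python {current} is outside 3.9-3.12; install a supported version.")
```

Save it as any file name you like (for example, check_python.py) and run it with the same `python` command you will use for the later steps.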

Step 3 – Install Required Dependencies

In your terminal, navigate to the project folder and run:

pip install -r requirements.txt

After the requirements finish installing, run:

pip install -U vllm --pre --extra-index-url https://wheels.vllm.ai/nightly

Note: This installs the latest nightly (pre-release) build of vLLM, which includes streaming support and the newest model support.
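After both install commands finish, you can confirm that the main libraries resolved correctly. This sketch uses the standard library's `importlib.metadata`; the package list is an assumption based on the libraries named in this guide, so extend it to match `requirements.txt`.

```python
from __future__ import annotations

from importlib.metadata import PackageNotFoundError, version

# Assumption: the main libraries named in this guide; extend to match requirements.txt.
PACKAGES = ["transformers", "accelerate", "vllm"]


def installed_versions(packages: list[str]) -> dict[str, str | None]:
    """Map each distribution name to its installed version, or None if missing."""
    found: dict[str, str | None] = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    for name, ver in installed_versions(PACKAGES).items():
        print(f"{name}: {ver or 'NOT INSTALLED'}")
```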

Step 4 – Start the vLLM Model Server

Start the vLLM model server, one of the inference engines Zhipu AI supports for GLM 4.5. This launches a local OpenAI-compatible API server at http://127.0.0.1:8000.

python -m vllm.entrypoints.openai.api_server --model zai-org/GLM-4.5

Note: If the model isn’t pre-downloaded, vLLM will fetch it automatically from Hugging Face.

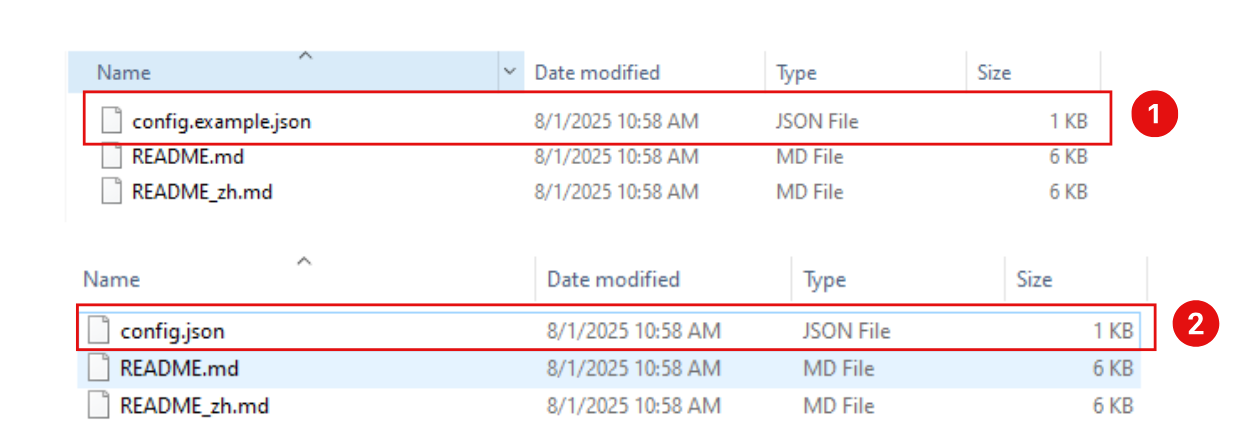
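Once the server is up, any HTTP client can talk to it through the OpenAI-compatible `/v1/chat/completions` endpoint. The sketch below uses only the standard library, asks the server which model it is serving via `/v1/models` (so you don't have to retype the model name), and assumes the default address shown above. The prompt and `max_tokens` value are arbitrary examples.

```python
import json
import urllib.error
import urllib.request

# Assumption: the server from Step 4 is running on its default address.
BASE_URL = "http://127.0.0.1:8000/v1"


def served_model_id(base_url: str = BASE_URL) -> str:
    """Ask the server which model it is serving (first entry of GET /v1/models)."""
    with urllib.request.urlopen(f"{base_url}/models", timeout=30) as response:
        models = json.loads(response.read().decode("utf-8"))
    return models["data"][0]["id"]


def build_chat_payload(prompt: str, model: str, max_tokens: int = 256) -> dict:
    """Build a single-turn chat request body in the OpenAI chat format."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }


def chat(prompt: str, base_url: str = BASE_URL) -> str:
    """Send one prompt to /v1/chat/completions and return the reply text."""
    payload = build_chat_payload(prompt, served_model_id(base_url))
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Generous timeout: the first request can be slow on a large model.
    with urllib.request.urlopen(request, timeout=300) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    try:
        print(chat("Explain a Python list comprehension in one sentence."))
    except urllib.error.URLError as exc:
        print(f"Could not reach the vLLM server at {BASE_URL}: {exc}")
```

Because the endpoint follows the OpenAI format, the official `openai` Python client pointed at the same base URL should also work; the standard-library version simply avoids an extra dependency.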

Step 5 – Rename “config.example.json” file

Inside the example/ folder, rename “config.example.json” to “config.json”. No edits to the file are required.
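If you prefer to script this step, the sketch below does the rename with `pathlib`. The default folder name `example` is an assumption; pass whichever folder actually contains `config.example.json` in your download. It leaves an existing `config.json` untouched rather than overwriting it.

```python
from __future__ import annotations

from pathlib import Path


def activate_config(folder: str | Path = "example") -> Path:
    """Rename config.example.json to config.json inside `folder`.

    Assumption: `folder` is the directory that holds config.example.json.
    An existing config.json is left as-is instead of being overwritten.
    """
    folder = Path(folder)
    source = folder / "config.example.json"
    target = folder / "config.json"
    if target.exists():
        return target
    if not source.exists():
        raise FileNotFoundError(f"{source} not found; check the folder path")
    source.rename(target)
    return target


if __name__ == "__main__":
    try:
        print(f"Using {activate_config()}")
    except FileNotFoundError as exc:
        print(exc)
```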

Step 6 – Run Inference

That’s all. Now run the inference with the following command. You’ll be prompted to enter text, and GLM 4.5 will respond directly in the terminal.

python inference/trans_infer_cli.py

Note: Running GLM 4.5 locally gives you full control over inference, privacy, and customization.
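`trans_infer_cli.py` is the project's own script. If you would rather chat with the vLLM server started in Step 4, here is a hypothetical alternative sketch (not part of the GLM 4.5 repository) that streams the reply as it is generated. It sends `"stream": true` to the OpenAI-compatible endpoint and parses the server-sent `data:` lines until `data: [DONE]`. Each prompt is sent on its own, without conversation history; type `exit` or press Enter on an empty line to quit.

```python
import json
import urllib.error
import urllib.request
from typing import Iterable, Iterator

# Assumption: the vLLM server from Step 4 is running on its default address.
BASE_URL = "http://127.0.0.1:8000/v1"


def iter_stream_text(lines: Iterable[str]) -> Iterator[str]:
    """Yield the text deltas from OpenAI-style server-sent event lines."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines between events
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break  # end-of-stream marker
        choices = json.loads(data).get("choices") or []
        if choices:
            content = (choices[0].get("delta") or {}).get("content")
            if content:
                yield content


def stream_chat(prompt: str, model: str, base_url: str = BASE_URL) -> Iterator[str]:
    """Send one prompt with "stream": true and yield the reply piece by piece."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": True,
    }
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=300) as response:
        # Iterating the response yields raw byte lines as they arrive.
        yield from iter_stream_text(raw.decode("utf-8") for raw in response)


def chat_loop(base_url: str = BASE_URL) -> None:
    """Minimal terminal chat using the model the server reports at /v1/models."""
    with urllib.request.urlopen(f"{base_url}/models", timeout=30) as response:
        model = json.loads(response.read().decode("utf-8"))["data"][0]["id"]
    while True:
        prompt = input("You: ").strip()
        if not prompt or prompt.lower() == "exit":
            break
        print("GLM 4.5: ", end="", flush=True)
        for piece in stream_chat(prompt, model, base_url):
            print(piece, end="", flush=True)
        print()


if __name__ == "__main__":
    try:
        chat_loop()
    except (EOFError, KeyboardInterrupt):
        print()
    except urllib.error.URLError as exc:
        print(f"Could not reach the vLLM server at {BASE_URL}: {exc}")
```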

Why Isn't GLM 4.5 Compatible With Python 3.13?

If you try to run the GLM 4.5 or GLM 4.5 Air model on Python 3.13, it will likely fail, because key dependencies had not yet been updated for Python 3.13 at the time of writing.

- Zhipu AI’s GLM 4.5 relies on libraries such as transformers, accelerate, vLLM, and SGLang, all of which require a Python version newer than 3.8.

- According to its GitHub repository, vLLM officially supports Python 3.8, 3.9, 3.10, 3.11, and 3.12.

- Running pip install sglang on Python 3.13 leads to errors like: TypeError: urlopen() got an unexpected keyword argument ‘cafile’

What Can Developers Build Locally With GLM 4.5?

You can build a range of applications locally with GLM 4.5, including:

- Private Coding Assistants: Generate code snippets, debug functions, explain unfamiliar code, or power a conversational chatbot.

- Content Generation Tools: Write SEO blog posts, summarize news, and generate social media captions or ad copy.

- Thinking Assistants: Extract action items from notes, expand bullet points into full paragraphs or rewrite text in different tones.

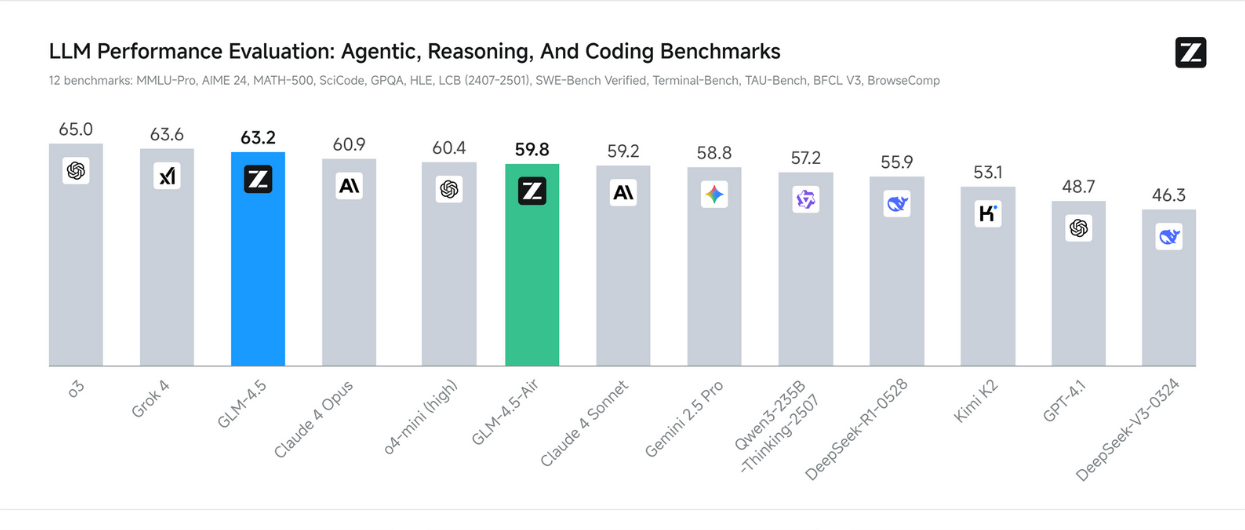

GLM 4.5 Benchmarks

Even in its early stages, the GLM (General Language Model) series gained widespread popularity, with more than 700,000 developers using it.

Z.ai's new GLM 4.5 model competes strongly with the leading LLMs. Across 12 benchmarks (3 for agentic tasks, 7 for reasoning, and 2 for coding), GLM 4.5 ranks 3rd in overall performance.

With this strong performance in coding, reasoning, and agentic tasks, GLM 4.5 and GLM 4.5 Air are likely to disrupt the industry.


Frequently Asked Questions

Who developed GLM 4.5 model?

Zhipu AI, a Chinese AI research company, developed this model.

Which is the best LLM model for coding?

GPT-4 and Claude 3 Opus are considered top performers in coding benchmarks. However, GLM 4.5 gives them tough competition.

Does GLM 4.5 beat DeepSeek R1 and Kimi K2?

According to Zhipu AI's published results, GLM 4.5 outperforms both DeepSeek R1 and Kimi K2 on several reasoning and agentic benchmarks.

Can Zhipu AI’s GLM 4.5 work autonomously?

Yes, GLM 4.5 supports agentic workflows and can perform tasks autonomously when integrated properly.

Disclaimer: The information in this article is for educational purposes only. We do not own, and are not partnered with, these websites. For more information, read our terms and conditions.

