Even after implementing a powerful ERP like Odoo, many teams still struggle with slow processes, complicated workflows, persistent manual steps, bottlenecks, and inaccurate data. Traditional ERP systems automate processes based on rules, but today’s business environments call for automation based on intelligence. Teams need systems that can analyze, learn, predict, and act, not just follow static workflows.

In this blog, you’ll learn how Odoo AI Integration addresses real operational challenges, what intelligent automation actually looks like, and how teams use it to reduce effort, improve reliability, and accelerate innovation.

Why Odoo AI Integration Matters for High-Complexity Workflows

High-complexity workflows often involve multiple decision points, large data volumes, and tasks that need constant adjustment, something traditional rule-based automation struggles to manage.

Odoo AI Integration adds intelligence to these sequences by analyzing patterns, forecasting needs, and automating decisions as they arise. This not only reduces manual intervention but also keeps workflows accurate and consistent as operational requirements grow. Complex environments require:

- Real-time decisions

- Intelligent predictions

- Adaptive workflow behavior

- Consistent accuracy across large datasets

- Automation that learns from results

Odoo AI Integration introduces capabilities that rule-based automation cannot provide. It can add intelligence across modules such as CRM, inventory, accounting, manufacturing, HR, and more.

The Limitations of Standard Odoo Automation

Standard Odoo automation works well for straightforward, rule-based tasks but struggles when workflows demand contextual decision-making or complex logic. It cannot analyze patterns, anticipate outcomes, or adapt when conditions change, which often leads to manual intervention. Before looking at the advantages of AI integration, it’s worth acknowledging the limitations of native Odoo automation:

1. Rule-based workflows without contextual logic

Rule-based workflows in Odoo follow only fixed triggers and conditions, so they can’t understand context or adjust to new situations. If a variable changes, such as customer behavior, inventory levels, or unexpected delays, the system cannot adapt automatically.

2. No predictive abilities

Traditional Odoo workflows can execute predefined tasks, but they can’t anticipate what will happen next. Without forecasting capabilities, the system cannot predict demand, identify risks, or recommend preventive steps before issues arise.

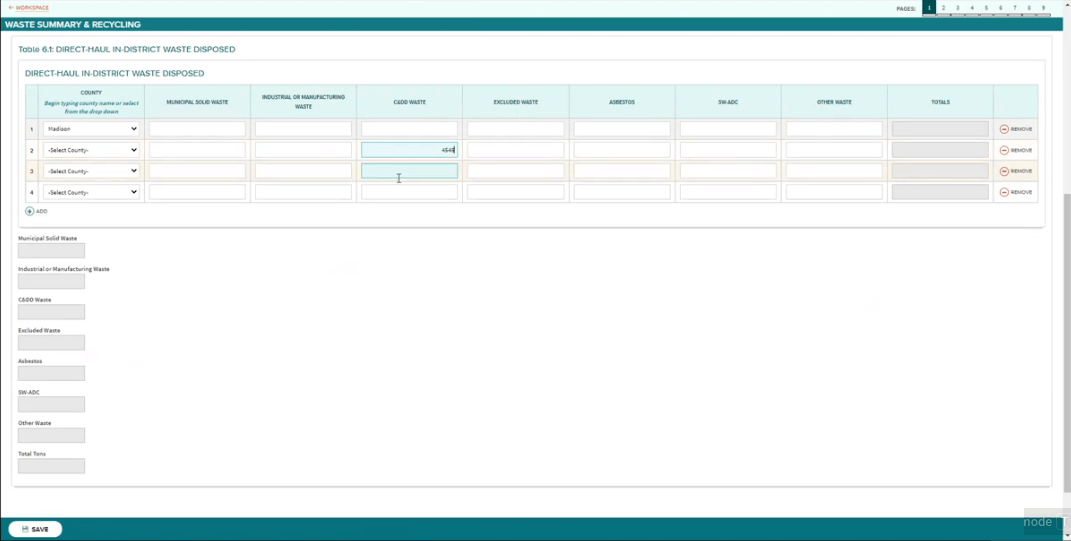

3. Heavy dependency on manual validation

Standard Odoo workflows still require people to review entries, verify data accuracy, and cross-check information before moving tasks forward. This manual approval slows down operations and increases the chance of human error, especially when handling large volumes of data.

4. Difficulty handling multi-layered workflows

Multi-layered workflows require conditional decisions, dynamic branching, and coordination across different modules, something standard Odoo automation isn’t built to handle. When workflows involve multiple dependencies or exceptions, the system often becomes rigid and needs manual supervision to keep things moving.

How AI Fills These Gaps

Odoo AI Integration enables:

- Decision automation

- Predictive modeling

- Data-driven workflow adjustments

- Intelligent recommendations

- Automated insights generation

- Error detection and anomaly spotting

This shifts automation from reactive to proactive, and ultimately to predictive.

How Odoo AI Integration Reduces Manual Effort at Scale

AI-enhanced Odoo workflows significantly reduce operational workload through advanced automation. The following sections break down how AI transforms everyday tasks, freeing teams to focus on strategic work instead of repetitive activities.

AI-Driven Data Processing and Validation

AI improves Odoo’s data handling by automatically detecting errors, cleaning up inconsistencies, and validating entries more consistently than manual review. It learns from historical patterns what “correct” data should look like, reducing the time teams spend fixing records. AI automates:

- Duplicate detection

- Field prediction and autocomplete

- Cross-record validation

- Probability scoring for missing values

- Data cleansing based on patterns

This reduces manual data entry errors and ensures reliable datasets for decision-making.
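To make duplicate detection concrete, here is a minimal Python sketch, assuming contacts have been exported from Odoo as plain dictionaries with hypothetical `id`, `name`, and `email` keys. It uses string similarity from the standard library (`difflib.SequenceMatcher`) rather than a trained model, so treat it as a baseline that an ML-based matcher would improve on; the 0.85 threshold is an illustrative choice.

```python
from difflib import SequenceMatcher
from itertools import combinations


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences don't hide duplicates."""
    return " ".join(text.lower().split())


def find_duplicate_contacts(contacts, threshold=0.85):
    """Return (id_a, id_b, score) for contact pairs that look like duplicates.

    Pairs sharing a normalized, non-empty email are flagged outright (score 1.0);
    otherwise names are compared with SequenceMatcher's similarity ratio (0..1).
    """
    matches = []
    for a, b in combinations(contacts, 2):
        if a["email"] and normalize(a["email"]) == normalize(b["email"]):
            matches.append((a["id"], b["id"], 1.0))
            continue
        score = SequenceMatcher(None, normalize(a["name"]), normalize(b["name"])).ratio()
        if score >= threshold:
            matches.append((a["id"], b["id"], round(score, 2)))
    return matches
```

Comparing every pair is O(n²), so larger databases would normally add a blocking step first (for example, only comparing contacts that share a city or email domain) and route flagged pairs to a reviewer rather than merging them automatically.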

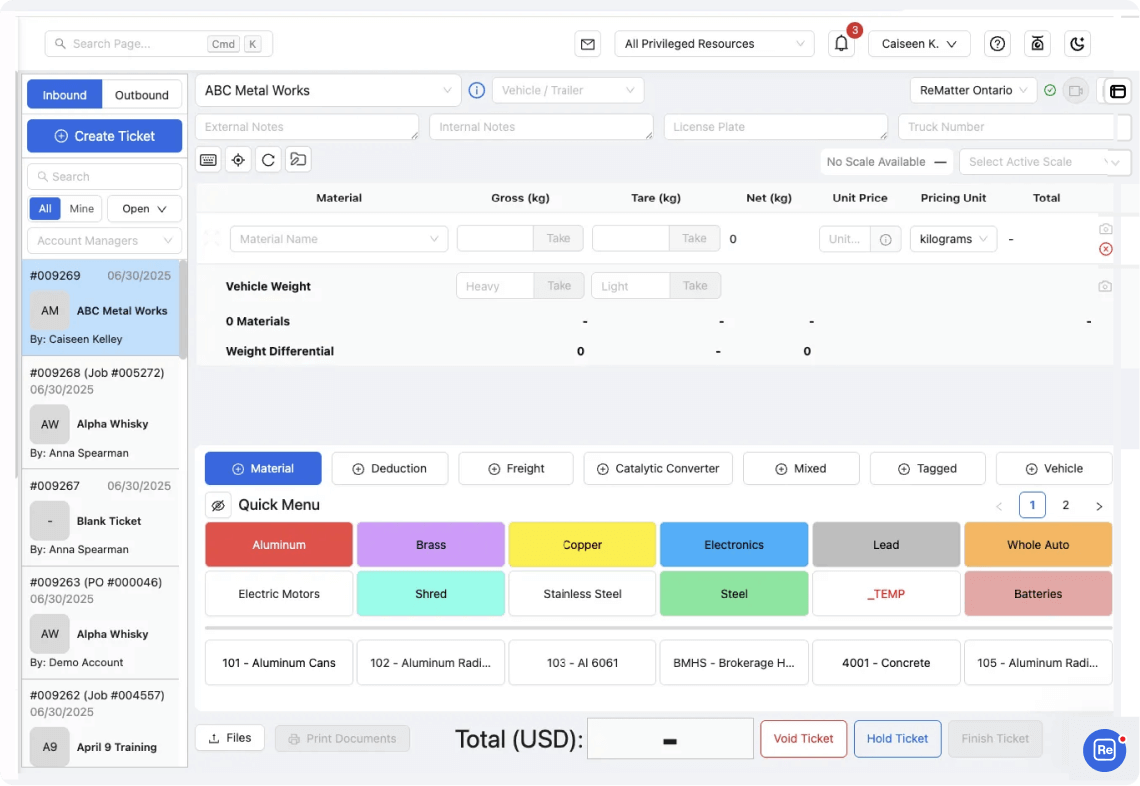

Automated Decision Making for Complex Workflows

AI enables Odoo to make smart, context-aware decisions that would normally necessitate human judgment, such as approvals, prioritization, or task routing. By analyzing real-time data and historical patterns, AI determines the best action without needing manual intervention.

Examples include:

- Auto-approving low-risk purchase orders

- Suggesting optimal reorder points

- Routing tickets based on sentiment

- Recommending lead actions

- Predicting workflow priority

Instead of waiting for human review, routine decisions happen instantly.
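As an illustration of auto-approving low-risk purchase orders, the sketch below combines a few risk signals into a score and approves only below a cutoff. Everything here is an assumption for illustration: the `PurchaseOrder` fields are hypothetical (not Odoo's `purchase.order` fields), and the weights and the 0.25 cutoff are hand-picked. In a real AI setup, a model trained on historical approvals would supply the score.

```python
from dataclasses import dataclass


@dataclass
class PurchaseOrder:
    amount: float                # order total in company currency
    vendor_on_time_rate: float   # share of past deliveries that arrived on time (0..1)
    is_new_vendor: bool
    price_vs_last_order: float   # unit price divided by the previous order's unit price


def risk_score(po: PurchaseOrder) -> float:
    """Weighted 0..1 risk score (higher = riskier). Weights are illustrative only."""
    score = 0.0
    score += min(po.amount / 50_000, 1.0) * 0.4              # large orders are riskier
    score += (1.0 - po.vendor_on_time_rate) * 0.3            # unreliable vendors
    score += 0.2 if po.is_new_vendor else 0.0                # no track record yet
    score += min(max(po.price_vs_last_order - 1.0, 0.0), 1.0) * 0.1  # price jumps
    return round(score, 3)


def decide(po: PurchaseOrder, auto_approve_below: float = 0.25) -> str:
    """Auto-approve low-risk orders; send everything else to a human."""
    return "auto_approve" if risk_score(po) < auto_approve_below else "manual_review"
```

Keeping the cutoff as a parameter lets a team start conservatively and raise it only after reviewing how auto-approved orders actually performed.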

Intelligent Workflow Optimization Through ML Models

Machine learning (ML) models continually analyze how workflows perform inside Odoo and identify patterns that slow teams down. Based on this learning, AI recommends improvements, automates repetitive steps, and adapts processes to make them more efficient over time. As data grows, the system keeps refining workflows by:

- Identifying bottlenecks

- Detecting repetitive manual tasks

- Recommending new automations

- Highlighting inefficiencies

- Measuring workflow performance

This creates a self-optimizing Odoo environment.
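Bottleneck detection usually starts from stage-change history. The sketch below assumes you have exported rows of `(record_id, stage_name, entered_at)` (a hypothetical shape, for example derived from stage-change logs) and computes how long records sit in each stage on average. It is plain descriptive statistics rather than ML, but it is the kind of baseline signal an optimization model would build on.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def stage_durations(transitions):
    """transitions: iterable of (record_id, stage_name, entered_at: datetime), any order.

    Returns {stage: [hours spent, ...]} for every stage a record has left.
    """
    by_record = defaultdict(list)
    for record_id, stage, entered_at in transitions:
        by_record[record_id].append((entered_at, stage))
    durations = defaultdict(list)
    for events in by_record.values():
        events.sort()  # chronological order per record
        # Time in a stage = time the next stage was entered minus time this one was.
        for (start, stage), (end, _next_stage) in zip(events, events[1:]):
            durations[stage].append((end - start).total_seconds() / 3600)
    return durations


def find_bottleneck(transitions):
    """Return (slowest stage, {stage: average hours})."""
    averages = {stage: mean(hours) for stage, hours in stage_durations(transitions).items()}
    return max(averages, key=averages.get), averages
```

The final stage of each record has no exit time, so it is excluded from the averages rather than counted as zero.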

Key Use Cases Where Odoo AI Integration Delivers Maximum Impact

Odoo AI Integration shines brightest in areas where workflows depend on large datasets, repeated decision-making, and time-sensitive actions. From sales and inventory forecasting to accounting automation and manufacturing optimization, AI reduces manual effort and improves accuracy at every step. These real-world applications show how intelligence turns routine operations into high-efficiency workflows.

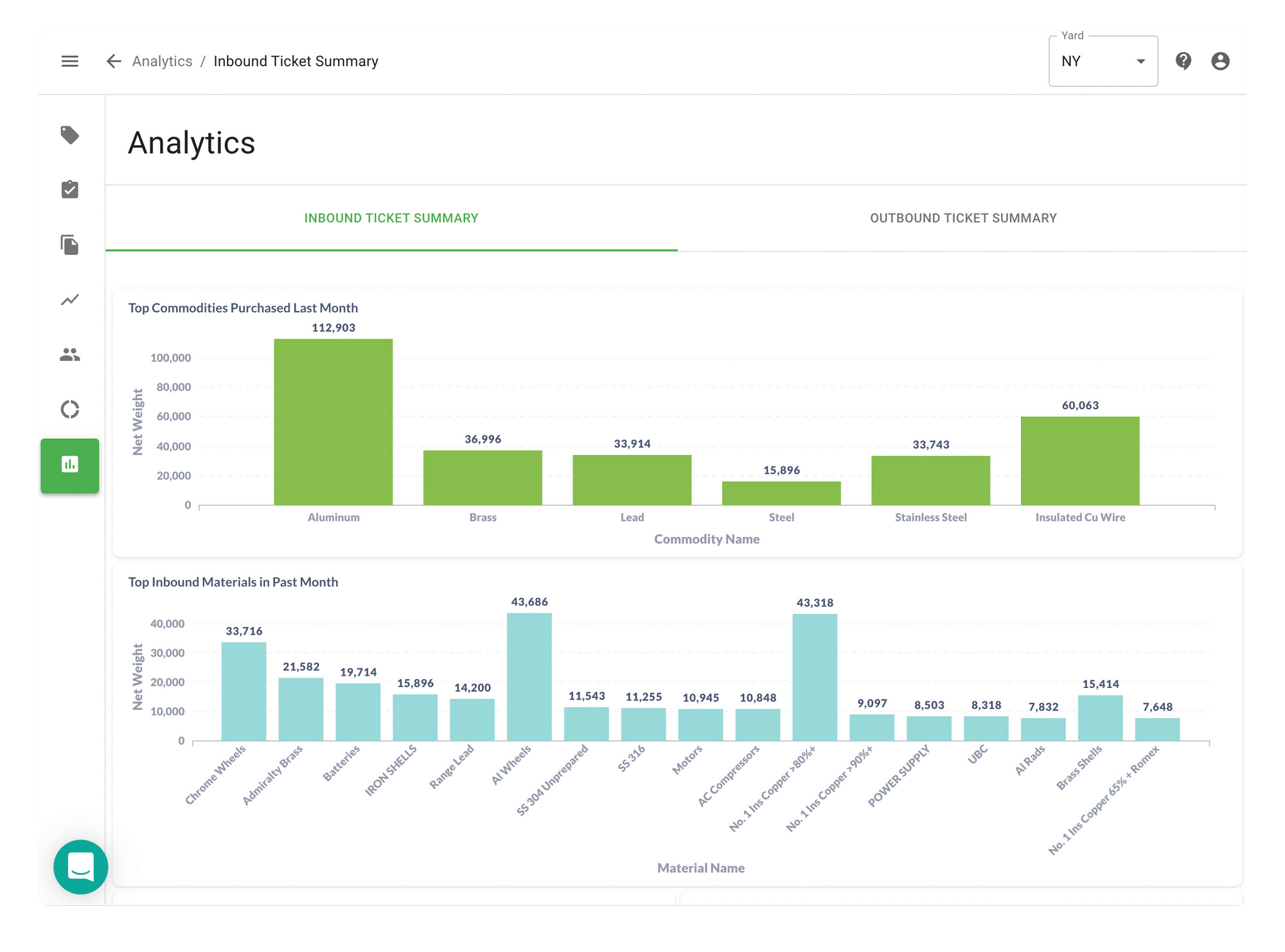

AI-Powered Lead Scoring and CRM Optimization

AI enhances Odoo’s CRM by automatically scoring leads based on their profile, engagement patterns, and historical data, helping teams focus on the highest-quality prospects. Instead of leaving reps to judge every lead by hand, AI helps by:

- Scoring leads automatically

- Predicting conversion probability

- Ranking leads based on behavioral patterns

- Triggering automated follow-ups

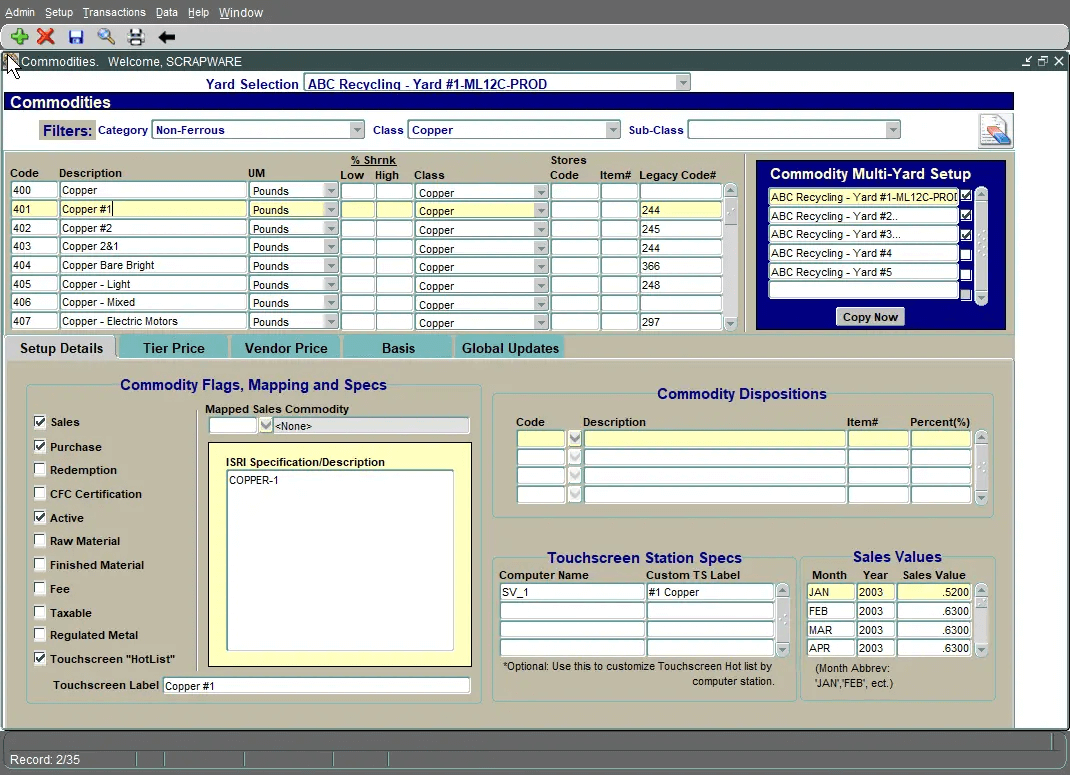

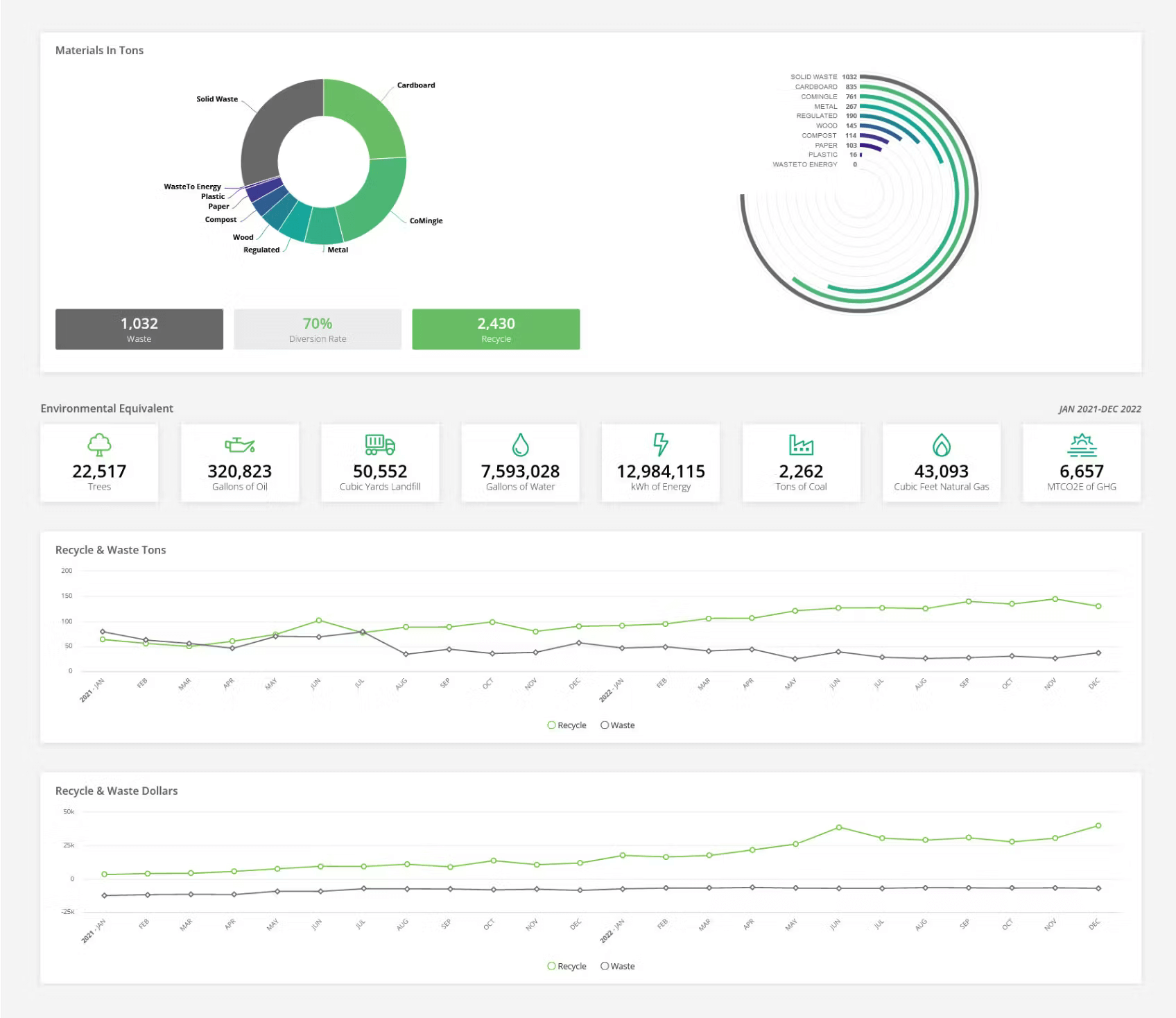

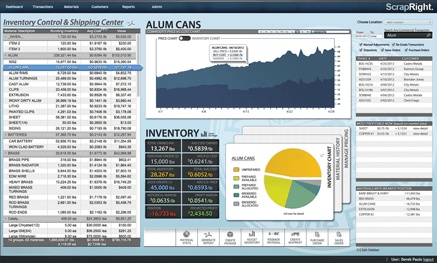
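A common way to turn lead features into a conversion probability is a logistic model: a weighted sum of features passed through the sigmoid function. The sketch below assumes hypothetical feature names and hand-picked weights; a real model would learn them from historical won and lost leads. The output is expressed on a 0 to 100 scale, the same percentage style Odoo uses for a lead’s probability.

```python
import math

# Hypothetical features and weights, for illustration only.
WEIGHTS = {
    "email_opens": 0.15,
    "site_visits": 0.10,
    "requested_demo": 1.2,
    "company_size_large": 0.6,
    "days_since_contact": -0.05,  # interest decays the longer a lead goes quiet
}
BIAS = -2.0


def conversion_probability(lead: dict) -> float:
    """Logistic model: sigmoid(bias + weighted features), returned as a 0-100 percentage."""
    z = BIAS + sum(w * lead.get(feature, 0) for feature, w in WEIGHTS.items())
    return round(100 / (1 + math.exp(-z)), 1)


def rank_leads(leads):
    """Sort leads from most to least likely to convert."""
    return sorted(leads, key=conversion_probability, reverse=True)
```

Missing features default to 0, so sparse lead records still get a score instead of raising an error.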

Predictive Inventory Management

AI transforms Odoo’s inventory module by forecasting future requirements, identifying stock risks, and recommending optimal reorder points before shortages or overstocking occur. AI models forecast:

- Future demand

- Supplier delays

- Seasonal spikes

- Optimal stock levels

This replaces manual calculations and helps prevent stockouts and overstocking.
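The arithmetic behind an optimal reorder point can be shown with the classic formula: reorder point = average daily demand × lead time (days) + safety stock, where safety stock = z × σ(daily demand) × √(lead time). This is a statistical baseline rather than ML, and it assumes independent, roughly normal daily demand and a fixed lead time; a forecasting model would replace the historical average with predicted demand. The function name and the default z of 1.645 (about a 95% cycle service level) are illustrative choices. The result is the kind of figure you might use as the minimum quantity of an Odoo reordering rule.

```python
import math
from statistics import mean, stdev


def reorder_point(daily_demand, lead_time_days, service_z=1.645):
    """Expected demand over the lead time plus safety stock, rounded up to whole units.

    daily_demand: historical units sold per day (needs at least two values for stdev).
    service_z: z-score for the target cycle service level (1.645 is roughly 95%).
    """
    expected_during_lead_time = mean(daily_demand) * lead_time_days
    safety_stock = service_z * stdev(daily_demand) * math.sqrt(lead_time_days)
    return math.ceil(expected_during_lead_time + safety_stock)
```

For example, with daily demand of 8, 12, 10, 9, and 11 units and a 4-day lead time, expected demand is 40 units and safety stock adds about 5.2, giving a reorder point of 46.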

Intelligent Accounting Automation

AI enhances Odoo’s accounting processes by automating repetitive tasks such as reconciliation, expense categorization, and invoice verification. It can detect anomalies or likely errors in real time, reducing the burden of human oversight and minimizing financial mistakes. AI transforms accounting modules with:

- Automated reconciliation

- Fraud detection

- Predictive cash flow insights

- Smart document extraction

- Expense categorization

Manual number-crunching is replaced with precise automated workflows.
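Automated reconciliation boils down to proposing a match between each bank statement line and an open invoice. The sketch below is a heuristic stand-in, assuming hypothetical `BankLine` and `Invoice` shapes: it matches on amount within a tolerance and prefers invoices whose number appears in the payment reference. An ML-based matcher would learn how much to trust each signal instead of using fixed rules.

```python
from dataclasses import dataclass


@dataclass
class BankLine:
    ref: str        # free-text payment reference from the bank statement
    amount: float


@dataclass
class Invoice:
    number: str
    amount_due: float


def suggest_matches(bank_lines, invoices, tolerance=0.01):
    """Return [(bank_ref, invoice_number or None)]; None means 'send to manual review'."""
    open_invoices = list(invoices)
    suggestions = []
    for line in bank_lines:
        # Candidates: open invoices whose amount due matches the payment within tolerance.
        candidates = [inv for inv in open_invoices
                      if abs(inv.amount_due - line.amount) <= tolerance]
        # Prefer a candidate whose number is quoted in the payment reference.
        by_ref = [inv for inv in candidates if inv.number.lower() in line.ref.lower()]
        chosen = (by_ref or candidates or [None])[0]
        if chosen is not None:
            open_invoices.remove(chosen)  # each invoice is matched at most once
        suggestions.append((line.ref, chosen.number if chosen is not None else None))
    return suggestions
```

Because invoices are consumed as they are matched, two identical payments cannot both be assigned to the same invoice.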

AI-Enhanced Manufacturing and MRP

AI integration in Odoo’s manufacturing module improves production planning, forecasting, maintenance, and quality control by analyzing historical and real-time data. Manufacturing benefits through:

- Predictive maintenance

- Production scheduling optimization

- Quality control automation

- Resource utilization insights

- Anomaly detection

As a result, downtime and the need for human intervention can drop significantly.

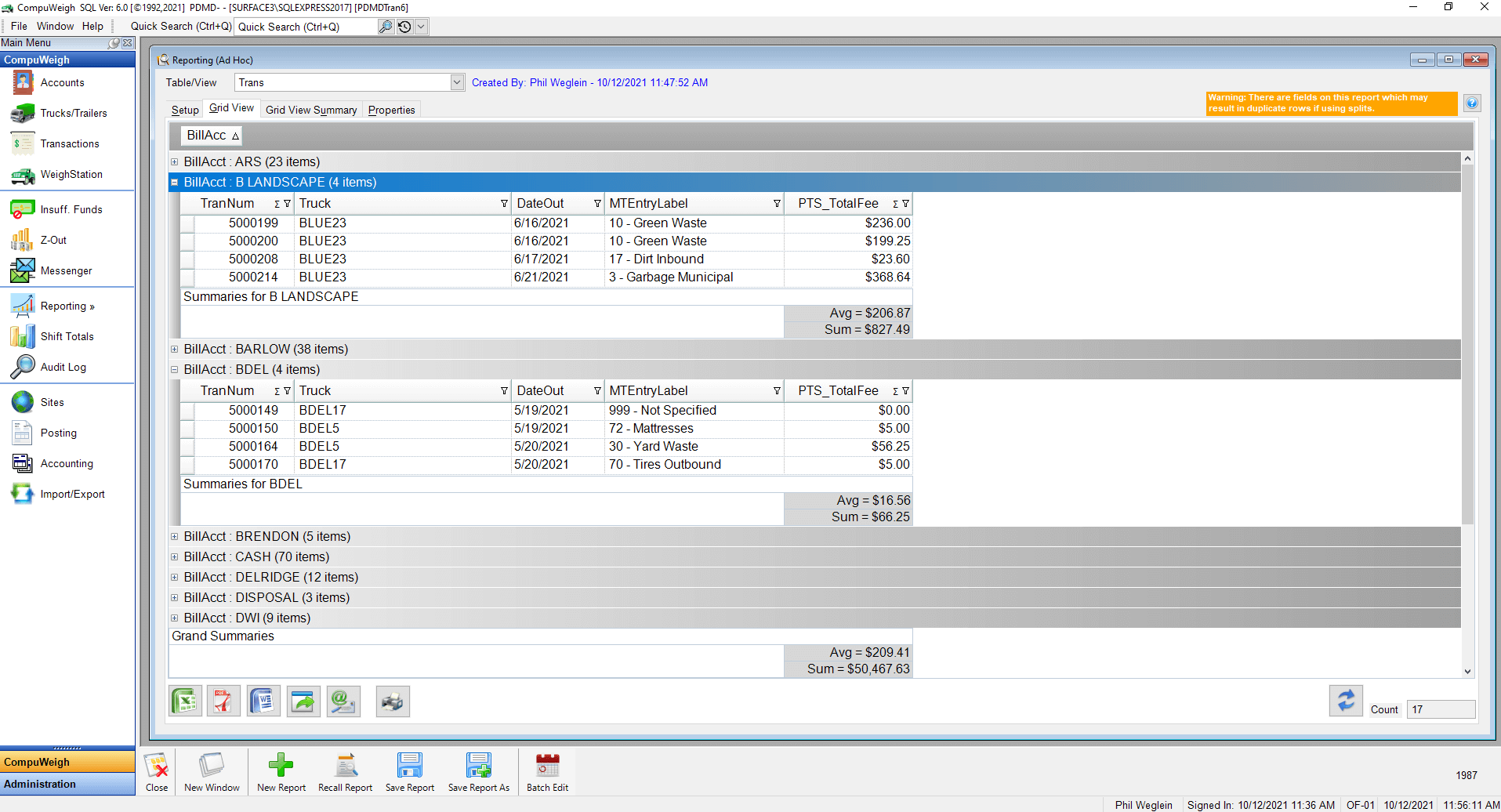

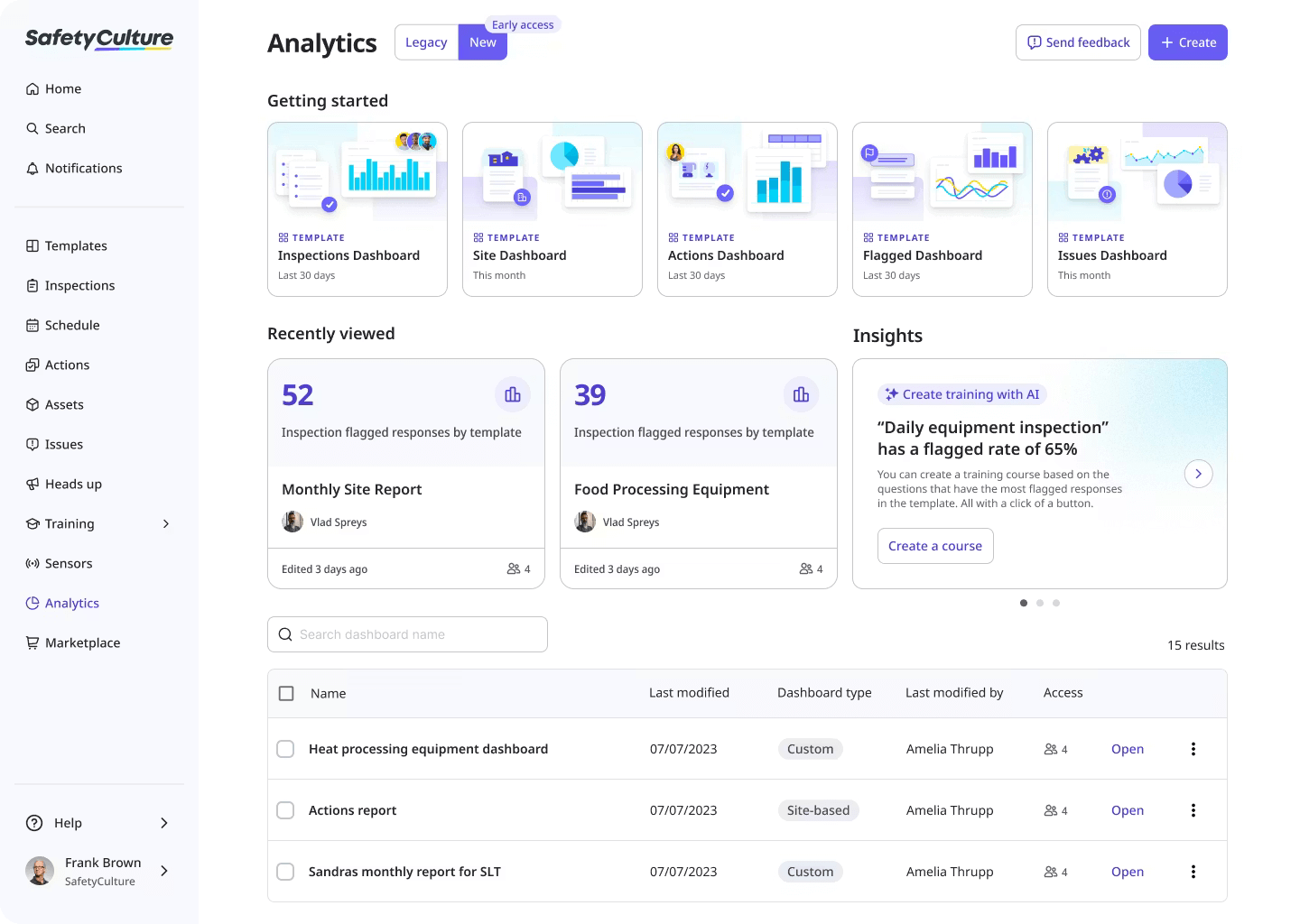

Automated Reporting and Predictive Analytics

AI in Odoo automates report generation and delivers forecasting insights, turning raw data into actionable intelligence. It identifies trends, predicts outcomes, and highlights likely issues before they arise, reducing the need for manual analysis. AI auto-generates reports such as:

- Sales performance predictions

- Employee productivity modeling

- Risk alerts

- Operational dashboards

- Workflow health metrics

Teams no longer spend hours manually compiling reports.
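The simplest version of a sales performance prediction is a trend line: fit ordinary least squares to past monthly totals and extend it forward. The sketch below assumes monthly sales are already aggregated into a list (a hypothetical input shape). A linear trend ignores seasonality, so it is a baseline for a report, not a full forecasting model.

```python
def fit_trend(values):
    """Ordinary least squares fit of values against their index 0..n-1.

    Returns (slope, intercept).
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two periods to fit a trend")
    x_bar = (n - 1) / 2            # mean of 0..n-1
    y_bar = sum(values) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(values))
    sxx = sum((x - x_bar) ** 2 for x in range(n))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def forecast_next_periods(monthly_sales, periods=3):
    """Extend the fitted trend line `periods` months beyond the data."""
    slope, intercept = fit_trend(monthly_sales)
    start = len(monthly_sales)
    return [round(slope * m + intercept, 2) for m in range(start, start + periods)]
```

For monthly sales of 100, 110, 120, and 130, the fitted slope is 10 per month, so the next three months are projected at 140, 150, and 160.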

How Odoo AI Integration Improves Speed, Accuracy, and Insights

Odoo AI Integration improves operational efficiency by automating routine tasks, reducing human errors, and delivering actionable insights in real time. AI-driven workflows accelerate decision-making, so processes are completed faster without compromising accuracy. Beyond reducing manual work, AI also improves operational intelligence.

Faster Execution Through Real-Time Decision Engines

AI-powered decision engines in Odoo analyze data as it arrives and trigger automated actions based on current conditions. These engines enable:

- On-the-spot approvals

- Instant routing

- Automated prioritization

- Live adjustments to tasks

This drastically speeds up operations.
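Instant routing can be sketched as a function that runs the moment a ticket arrives. In the example below, a tiny keyword lexicon stands in for a real NLP sentiment model, and the word lists, thresholds, and team names are all hypothetical. The point is the shape of the decision: score first, escalate strongly negative tickets, then route by topic.

```python
# Hypothetical lexicons; a production setup would use a trained sentiment model.
NEGATIVE = {"angry", "broken", "refund", "cancel", "urgent", "unacceptable", "late"}
POSITIVE = {"thanks", "great", "love", "happy", "resolved"}


def sentiment(text: str) -> int:
    """Crude lexicon score: +1 per positive word, -1 per negative word."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    return sum((w in POSITIVE) - (w in NEGATIVE) for w in words)


def route_ticket(subject: str, body: str) -> str:
    """Pick a queue immediately, without waiting for manual triage."""
    text = f"{subject} {body}"
    if sentiment(text) <= -2:
        return "escalation_team"      # clearly unhappy customer: prioritize
    lowered = text.lower()
    if "invoice" in lowered or "payment" in lowered:
        return "billing_team"
    return "support_queue"
```

Checking sentiment before topic means an angry billing complaint is escalated rather than sitting in the ordinary billing queue.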

Higher Accuracy Through Intelligent Error Detection

AI in Odoo continuously monitors data and workflow processes to identify inconsistencies, anomalies, or likely errors automatically. AI models can detect anomalies such as:

- Outliers in financial transactions

- Unusual stock movement

- Incorrect entries

- Fraud patterns

- Inconsistent data entries

Human oversight becomes lighter and more strategic.
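Outlier detection in financial transactions can be illustrated with the modified z-score, 0.6745 × (x − median) / MAD, where MAD is the median absolute deviation. Because it relies on medians, a single huge transaction cannot inflate the spread and hide itself the way it can with a mean and standard deviation. The 3.5 cutoff is a commonly used default; the function name and the plain list of amounts as input are assumptions for illustration.

```python
from statistics import median


def flag_outliers(amounts, threshold=3.5):
    """Return indices of amounts whose modified z-score exceeds the threshold."""
    med = median(amounts)
    mad = median(abs(x - med) for x in amounts)
    if mad == 0:
        # Most values are identical; anything different from them is unusual.
        return [i for i, x in enumerate(amounts) if x != med]
    return [i for i, x in enumerate(amounts)
            if abs(0.6745 * (x - med) / mad) > threshold]
```

Flagged indices would typically open a review activity rather than block the transaction, keeping human oversight focused on the few cases that need it.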

How Teams Can Implement Odoo AI Integration Effectively

Successfully integrating AI into Odoo requires a structured approach that aligns technology with business needs. Teams should start by identifying high-impact workflows, selecting the right AI models, and mapping processes for seamless integration. Integrating AI is not just a technical decision; it also requires operational alignment.

Step 1 – Identify High-Impact Manual Workflows

The first step in implementing Odoo AI Integration is pinpointing the workflows that consume the most time or are most prone to errors. Start where automation delivers the greatest efficiency gain:

- Repetitive tasks

- Data-heavy processes

- High-risk activities

- Decision-based workflows

- Time-consuming reports

Step 2 – Choose AI Models Based on Business Goals

After identifying critical workflows, select AI models that align with your organization’s goals, such as forecasting, classification, or natural language processing. Examples of useful models:

- Classification models (lead scoring, fraud detection)

- Regression models (forecasting, cost estimation)

- NLP models (ticket analysis, sentiment scoring)

- Clustering models (customer segmentation)

Step 3 – Integrate AI With Odoo Modules

Once the right AI models are selected, they need to be connected seamlessly to the relevant Odoo modules, such as CRM, inventory, or accounting. This involves:

- API connections

- Real-time data syncing

- Workflow mapping

- Automated triggers

- Feedback loops for continuous learning
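For the API connection and feedback loop, Odoo exposes an external XML-RPC API (`/xmlrpc/2/common` for `authenticate`, `/xmlrpc/2/object` for `execute_kw`). The sketch below pulls opportunities from `crm.lead` and writes scores back to the lead’s `probability` field. The helper names are hypothetical, and field and endpoint availability can vary between Odoo versions (newer releases also offer JSON-based APIs), so check against your version before relying on it.

```python
import xmlrpc.client


def connect(url, db, username, password):
    """Authenticate against Odoo's external XML-RPC API; returns (models proxy, uid)."""
    common = xmlrpc.client.ServerProxy(f"{url}/xmlrpc/2/common")
    uid = common.authenticate(db, username, password, {})
    if not uid:
        raise PermissionError("Odoo authentication failed")
    models = xmlrpc.client.ServerProxy(f"{url}/xmlrpc/2/object")
    return models, uid


def fetch_opportunities(models, db, uid, password, limit=100):
    """Data-syncing half of the loop: read opportunities to score."""
    return models.execute_kw(
        db, uid, password, "crm.lead", "search_read",
        [[["type", "=", "opportunity"]]],
        {"fields": ["name", "email_from"], "limit": limit},
    )


def push_scores(models, db, uid, password, scores):
    """Feedback half of the loop: write model output back. scores: {lead_id: 0-100}."""
    for lead_id, probability in scores.items():
        models.execute_kw(db, uid, password, "crm.lead", "write",
                          [[lead_id], {"probability": probability}])
```

A scheduled job could call `connect()`, score the fetched records with whatever model was chosen in Step 2, and then call `push_scores()`. Passing the `models` proxy in as a parameter also makes these helpers easy to test with a fake object instead of a live server.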

Step 4 – Measure AI Performance and Optimize Continuously

After integrating AI into Odoo, monitor its performance consistently and refine it based on what the numbers show. Track:

- Automation success rate

- Time saved

- Error reduction

- Workflow execution speed

- Business impact

AI models typically improve as they accumulate more data and feedback.
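Several of these metrics are simple ratios once the underlying counts are logged. The sketch below assumes hypothetical inputs: total tasks in a period, how many the AI workflow completed without human correction, error counts from comparable periods before and after rollout, and the average manual handling time measured beforehand.

```python
def automation_report(total_tasks, automated_ok, errors_before, errors_after,
                      manual_minutes_per_task):
    """Summarize a rollout period with a few of the metrics listed above.

    automated_ok: tasks the AI workflow completed without human correction.
    errors_before / errors_after: error counts from periods of similar volume.
    manual_minutes_per_task: average handling time measured before automation.
    """
    if total_tasks <= 0:
        raise ValueError("total_tasks must be positive")
    return {
        # Share of tasks finished end to end by the automated workflow.
        "automation_success_rate": round(automated_ok / total_tasks, 3),
        # Manual handling time avoided for tasks that needed no human touch.
        "hours_saved": round(automated_ok * manual_minutes_per_task / 60, 1),
        # Relative drop in errors; None when there is no baseline to compare with.
        "error_reduction": (round((errors_before - errors_after) / errors_before, 3)
                            if errors_before else None),
    }
```

For example, 1,020 of 1,200 tasks automated, errors falling from 80 to 30, and 6 minutes of manual handling per task give an 85% success rate, 102 hours saved, and a 62.5% error reduction. If task volumes differ between periods, compare error rates rather than raw counts.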

Conclusion

Odoo AI Integration marks a shift from conventional rule-based automation to intelligent, data-driven workflows that continuously improve over time. By reducing manual effort, improving accuracy, and enabling more sophisticated decision-making, AI turns Odoo into a smarter, more adaptable ecosystem. Teams that embrace AI-powered automation gain faster execution, deeper insights, and a future-ready operational advantage.

FAQs about Odoo AI Integration

How does Odoo AI Integration reduce the need for manual work?

AI automates data processing, decision-making, and repetitive tasks, minimizing human involvement.

Can AI improve Odoo’s data accuracy?

Yes. AI performs validation, error detection, and cleansing to enhance data reliability.

Which workflows benefit most from Odoo AI Integration?

CRM, accounting, inventory forecasting, manufacturing planning, and customer support gain the highest value.

Do teams need advanced technical knowledge to use AI with Odoo?

Not for day-to-day use. Once the integration is in place, AI runs automatically, and teams interact with it through familiar workflows and dashboards.

What are the long-term benefits of integrating AI with Odoo?

Improved workflow efficiency, higher accuracy, predictive insights, and scalable operations.